[dependencies]

scraper = "0.12.0"

reqwest = { version = "0.11.10", features = ["blocking", "json"] }

surf = "2.3.2"

tokio = { version = "1.17.0", features = ["full"] }

futures = "0.3.21"use futures::future::join_all;

use std::env;

use scraper::{Html, Selector};

async fn fetch_path(path:String) -> surf::Result<String>{

let mut back_string = String::new();

match surf::get(&path).await {

Ok(mut response) => {

match response.body_string().await{

Ok(text) =>{

back_string = format!("{}",text)

}

Err(_) => {

println!("Read response text Error!")

}

};

}

Err(_) => {

println!("reqwest get Error!")

}

}

Ok(back_string)

}

#[tokio::main]

async fn main() -> surf::Result<()>{

let stdin = env::args().nth(1).take().unwrap();

let paths = vec![stdin.to_string(),];

let result_list = join_all(paths.into_iter().map(|path|{

fetch_path(path)

})).await;

let mut list_string:Vec<String> = vec![];

for ele in result_list.into_iter(){

if ele.is_ok(){

list_string.push(ele.unwrap())

}else {

return Err(ele.unwrap_err())

}

}

let v = list_string.get(0).take().unwrap();

// println!("{}",v);

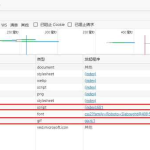

let fragment = Html::parse_fragment(v);

let ul_selector = Selector::parse("script").unwrap();

for element in fragment.select(&ul_selector) {

println!("{}",element.inner_html());

}

// println!("请求输出:{:?}",list_string);

Ok(())

}cargo run -- https://www.baidu.com/
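The program gathers the `join_all` results so that the first failed request aborts the run. The sketch below shows that fail-fast pattern in plain standard-library Rust. It collects an iterator of `Result`s into a single `Result<Vec<_>, _>`. It is an illustration under stated assumptions, not part of the original program. `collect_bodies` is a hypothetical helper, and `String` stands in for `surf::Error` so the example runs without any crates.

```rust
// Hypothetical helper: `String` is used as the error type in place of
// `surf::Error` (an assumption made so the sketch needs no dependencies).
fn collect_bodies(results: Vec<Result<String, String>>) -> Result<Vec<String>, String> {
    // Collecting into Result<Vec<_>, _> stops at the first Err and returns it;
    // if every item is Ok, the bodies are returned in their original order.
    results.into_iter().collect()
}

fn main() {
    let all_ok = vec![
        Ok("<html>a</html>".to_string()),
        Ok("<html>b</html>".to_string()),
    ];
    // prints: Ok(["<html>a</html>", "<html>b</html>"])
    println!("{:?}", collect_bodies(all_ok));

    let one_failed = vec![
        Ok("<html>a</html>".to_string()),
        Err("connection refused".to_string()),
        Err("timeout".to_string()),
    ];
    // prints: Err("connection refused")
    println!("{:?}", collect_bodies(one_failed));
}
```

Because `join_all` yields its outputs in the same order as the input futures, the first `Err` returned here corresponds to the earliest URL in the list that failed. It is not necessarily the request that failed first in time.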